What are image thumbnails, and why do we need them?

We see the term “thumbnail” more and more often in marketing and the online world. So what’s so important about it?

A thumbnail is a preview image, usually a smaller version of a larger image, hence the name. Thumbnails make file overviews easier to manage, and in online marketing they give viewers a first impression of the content.

They also serve as placeholders for multimedia content such as videos, giving a preview of what the rich media file (video, podcast, eBook, etc.) contains.

Their small file size also saves valuable resources such as bandwidth and improves page speed, so content loads faster.

The thumbnail is the first thing people see in a search engine (YouTube is a search engine, too) or on social media, and it is the gateway to the rest of the content. For this reason, good thumbnails can contribute significantly to the success of your website.

Thumbnails therefore strongly influence marketing results, for both organic and paid efforts. They can increase or, just as easily, decrease click-through rates (CTR), post-click engagement, and ultimately campaign performance.

Take a YouTube thumbnail as an example. If your video doesn’t reach the click-through rate of comparable videos, YouTube won’t promote it as much, which leads to fewer views and further poor performance. On the flip side, a great thumbnail boosts CTR and brings more views and higher engagement, which encourages YouTube’s algorithm to promote the video to a wider audience.

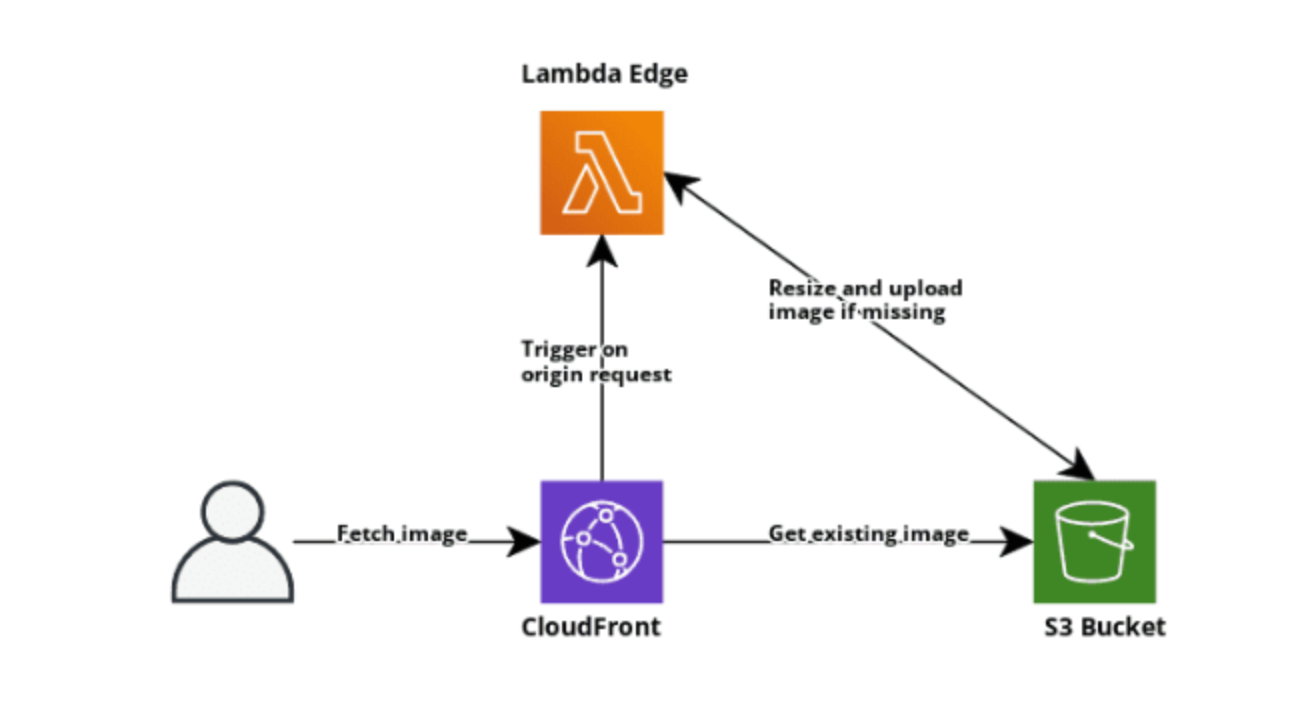

What will the infrastructure look like?

What is a CDN?

A CDN (Content Delivery Network) is a geographically distributed network of servers that delivers the content of websites and web services quickly to their users. The CDN's servers are placed in different locations so that each user is served from a nearby server, keeping response times as low as possible.

Image resizing lambda

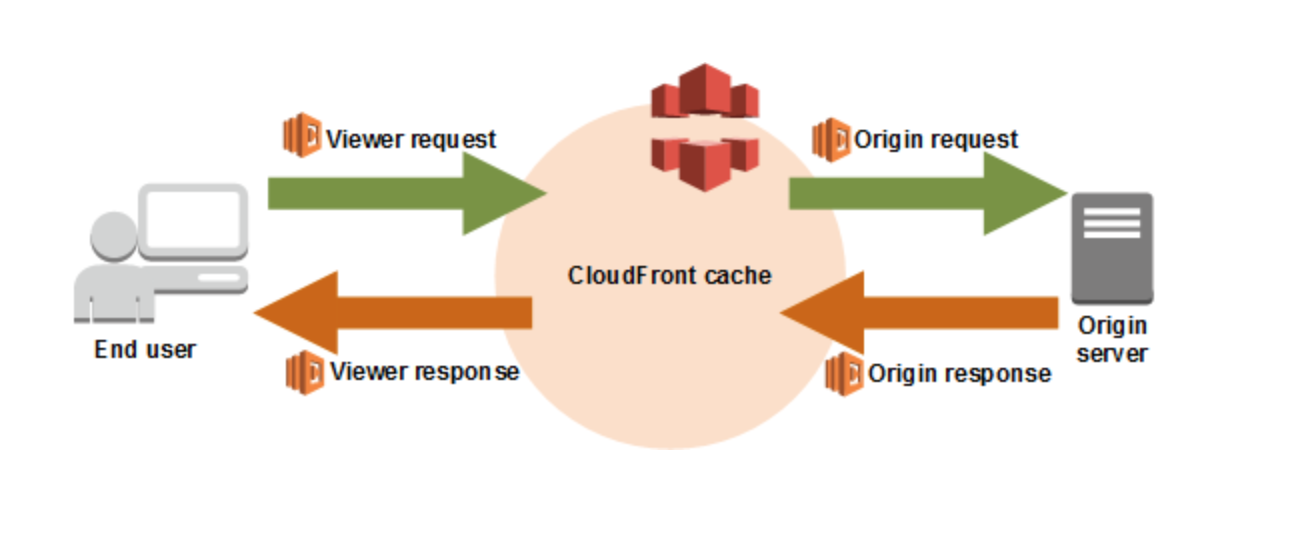

Amazon Web Services lets us customize the content served by CloudFront with Lambda@Edge. There are four points at which a Lambda function can modify CloudFront requests and responses:

Viewer request - executed every time CloudFront receives a request from the user

Origin request - executed before CloudFront forwards the request to the origin (S3), which happens only when the requested file is not in the CloudFront cache (we will use this one)

Origin response - executed after CloudFront receives the response from S3, before the response is cached

Viewer response - executed before CloudFront returns the response to the user
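To make the difference between the four trigger points concrete, here is a toy TypeScript model of a single CloudFront cache behavior. It only records which triggers would fire on a cache miss and on a cache hit. The class name `ToyEdgeCache` and the origin callback are hypothetical, and no AWS API is involved. The model shows why the origin-request trigger suits thumbnail generation: it runs only on cache misses, so cached thumbnails are served without invoking the function at all.

```typescript
// Toy model of one CloudFront cache behavior with Lambda@Edge triggers.
// It only records which trigger points would run; no AWS API is called.

type Trigger = 'viewer-request' | 'origin-request' | 'origin-response' | 'viewer-response';

class ToyEdgeCache {
  private readonly cache = new Map<string, string>();
  private readonly fetchFromOrigin: (uri: string) => string;

  constructor(fetchFromOrigin: (uri: string) => string) {
    this.fetchFromOrigin = fetchFromOrigin;
  }

  // Serves a URI and returns the body plus the triggers that fired, in order.
  handle(uri: string): { body: string; fired: Trigger[] } {
    const fired: Trigger[] = ['viewer-request']; // runs on every request
    let body = this.cache.get(uri);
    if (body === undefined) {
      // Cache miss: only now does CloudFront contact the origin (S3).
      fired.push('origin-request');
      body = this.fetchFromOrigin(uri);
      fired.push('origin-response'); // runs before the response is cached
      this.cache.set(uri, body);
    }
    fired.push('viewer-response'); // runs on every response
    return { body, fired };
  }
}

const edge = new ToyEdgeCache((uri) => `content of ${uri}`);
console.log(edge.handle('/some-img.jpg-50x50').fired); // cache miss: all four triggers fire
console.log(edge.handle('/some-img.jpg-50x50').fired); // cache hit: only the two viewer triggers fire
```

The model is simplified. For example, real CloudFront caches the output of the origin-response trigger and applies TTLs, which this sketch ignores.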

AWS Lambda code

We will use Node.js with TypeScript to write type-safe code. Image processing will be handled by the sharp library, which performs very well because it uses libvips under the hood. Images will be transcoded to the WebP format.

WebP is an image format developed by Google in 2010. Its key feature is an advanced compression algorithm that reduces image size without visible loss of quality.

Other formats also support compression, of course, but the techniques behind WebP are more advanced. When you weigh compression ratio against image quality, WebP generally comes out ahead of older formats such as JPEG and PNG.

On average, WebP images are 25-35% smaller than comparable JPEG or PNG files. Webmasters can therefore place more images on their websites without using up precious disk space on a rented VDS.

Google based WebP's lossy compression on the same techniques the VP8 video codec uses to compress its key frames.
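WebP files use the RIFF container format. A quick way to check that a thumbnail really came back as WebP (for example, after downloading it through CloudFront) is to inspect its first 12 bytes: the ASCII string `RIFF`, a 4-byte little-endian size, and the ASCII string `WEBP`. The `isWebP` helper below is a hypothetical sketch of that check and is not part of the original project.

```typescript
// Checks the 12-byte RIFF header that every WebP file starts with:
// bytes 0-3 = "RIFF", bytes 4-7 = little-endian chunk size, bytes 8-11 = "WEBP".
function isWebP(bytes: Uint8Array): boolean {
  if (bytes.length < 12) {
    return false;
  }
  const ascii = (start: number, end: number): string =>
    Array.from(bytes.subarray(start, end), (b) => String.fromCharCode(b)).join('');
  return ascii(0, 4) === 'RIFF' && ascii(8, 12) === 'WEBP';
}

// Usage: a hand-built WebP header ("RIFF", size 26, "WEBP") and the start of a PNG file.
const webpHeader = new Uint8Array([0x52, 0x49, 0x46, 0x46, 0x1a, 0x00, 0x00, 0x00, 0x57, 0x45, 0x42, 0x50]);
const pngStart = new Uint8Array([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a, 0x00, 0x00, 0x00, 0x0d]);
console.log(isWebP(webpHeader)); // true
console.log(isWebP(pngStart)); // false
```

In Node.js you can pass the `Buffer` returned by `fs.readFileSync` directly, since `Buffer` is a subclass of `Uint8Array`.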

Naturally, the main advantage is the size. Reducing the size has a positive effect on four aspects of working on the Internet at once:

Sites with WebP images load faster, because smaller files take less time to transfer. Even if an article contains up to a hundred images, compression keeps download times reasonable.

By uploading smaller images to a VDS, you save disk space.

Users will spend less mobile traffic when visiting the site from a smartphone.

The server's dedicated Internet connection carries far less load when the transmitted media weighs less, which is another plus for performance.
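All four benefits follow from the same arithmetic. The sketch below estimates how many bytes and how much transfer time a page saves. The image count, the 200 KB average size, the 30% reduction (inside the 25-35% range quoted above) and the 10 Mbit/s link are illustrative assumptions, and `estimateSavings` is a hypothetical helper.

```typescript
// Back-of-the-envelope estimate of what a WebP size reduction saves.
// Transfer time ignores latency, parallel downloads and protocol overhead.

interface SavingsEstimate {
  originalBytes: number;
  webpBytes: number;
  savedBytes: number;
  originalSeconds: number; // transfer time for the original images
  webpSeconds: number; // transfer time for the WebP images
}

function estimateSavings(
  imageCount: number,
  avgImageBytes: number,
  reduction: number, // e.g. 0.3 for files that are 30% smaller
  bandwidthBitsPerSecond: number,
): SavingsEstimate {
  if (reduction < 0 || reduction >= 1) {
    throw new RangeError('reduction must be in [0, 1)');
  }
  const originalBytes = imageCount * avgImageBytes;
  const webpBytes = originalBytes * (1 - reduction);
  const toSeconds = (bytes: number): number => (bytes * 8) / bandwidthBitsPerSecond;
  return {
    originalBytes,
    webpBytes,
    savedBytes: originalBytes - webpBytes,
    originalSeconds: toSeconds(originalBytes),
    webpSeconds: toSeconds(webpBytes),
  };
}

// Hypothetical page: 100 images of 200 KB (200000 bytes), 30% smaller, 10 Mbit/s link.
console.log(estimateSavings(100, 200000, 0.3, 10000000));
```

With these assumptions, the page shrinks from 20 MB to roughly 14 MB, and the raw transfer time drops from 16 s to about 11.2 s.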

These advantages are easiest to appreciate when WebP is compared with other formats, which is why we transcode our thumbnails to it. The Lambda handler looks like this:

```typescript
import { PassThrough, Readable } from 'stream';
import { pipeline } from 'stream/promises';
import sharp from 'sharp';
import type { CloudFrontRequestHandler, CloudFrontRequestEvent } from 'aws-lambda';
import {
  S3Client,
  HeadObjectCommand,
  GetObjectCommand,
  type GetObjectCommandOutput,
  NotFound,
  NoSuchKey,
} from '@aws-sdk/client-s3';
import { Upload } from '@aws-sdk/lib-storage';

// Lambda@Edge cannot be deployed with environment variables, so the region has to be
// hardcoded or filled in at build time.
const s3Client = new S3Client({ region: 'eu-central-1' });

// We allow thumbnails to be created only in these predefined dimensions.
const allowedDimensions = ['50x50', '200x200'];
const matchUriRegExp = new RegExp(`^/(.*)-(${allowedDimensions.join('|')})$`);

const doesObjectExist = async (bucket: string, key: string): Promise<boolean> => {
  try {
    await s3Client.send(new HeadObjectCommand({ Bucket: bucket, Key: key }));
    return true;
  } catch (err) {
    if (err instanceof NotFound) {
      return false;
    }
    throw err;
  }
};

const getObject = async (bucket: string, key: string): Promise<GetObjectCommandOutput | null> => {
  try {
    return await s3Client.send(new GetObjectCommand({ Bucket: bucket, Key: key }));
  } catch (err) {
    if (err instanceof NoSuchKey) {
      return null;
    }
    throw err;
  }
};

export const handler: CloudFrontRequestHandler = async (event: CloudFrontRequestEvent) => {
  const { request } = event.Records[0].cf;

  // CloudFront passes the URI percent-encoded, while S3 keys are stored decoded.
  const uri = decodeURIComponent(request.uri);

  // Check if the request includes dimensions (no resizing is needed to fetch the original image).
  const matchedUri = uri.match(matchUriRegExp);
  if (matchedUri === null) {
    return request;
  }

  // For an S3 origin the Host header is the bucket's domain name: <bucket>.s3.<region>.amazonaws.com
  const s3DomainName = request.headers.host[0].value;
  const bucket = s3DomainName.split('.')[0];
  const requestedKey = uri.substring(1);

  // If the resized image already exists, pass the request on to S3.
  if (await doesObjectExist(bucket, requestedKey)) {
    return request;
  }

  const [, originalKey, dimensions] = matchedUri;
  const [width, height] = dimensions.split('x').map(Number);

  // If the original image does not exist, pass the request on to S3 (which will handle the error).
  const originalImage = await getObject(bucket, originalKey);
  if (!originalImage?.Body) {
    return request;
  }

  // Use streaming to download, resize and upload the resized file to S3.
  const resizeStream = sharp()
    .rotate() // auto-orient based on EXIF data
    .resize({ width, height, fit: sharp.fit.inside, withoutEnlargement: true })
    .webp();
  const passThrough = new PassThrough();
  const upload = new Upload({
    client: s3Client,
    params: { Bucket: bucket, Key: requestedKey, Body: passThrough, ContentType: 'image/webp' },
  });

  // pipeline() propagates stream errors (e.g. an unsupported input format)
  // instead of leaving upload.done() waiting until the Lambda times out.
  await Promise.all([
    pipeline(originalImage.Body as Readable, resizeStream, passThrough),
    upload.done(),
  ]);

  // The resized image is now in S3, so pass the request on to S3.
  return request;
};
```
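Two steps of the handler are pure logic that can be tried out without AWS or sharp: matching the requested URI, and working out the output size. The sketch below re-implements them under hypothetical names (`parseThumbnailUri`, `fitInside`). `fitInside` approximates sharp's `fit: inside` with `withoutEnlargement: true`: it keeps the aspect ratio, fits the image within the box, and never upscales. sharp's own rounding may differ by a pixel.

```typescript
// Pure re-implementations of two steps of the handler, for local experiments.
// Neither AWS nor sharp is needed to run this.

const allowedThumbnailDimensions = ['50x50', '200x200'];
const thumbnailUriRegExp = new RegExp(`^/(.*)-(${allowedThumbnailDimensions.join('|')})$`);

interface ThumbnailRequest {
  originalKey: string;
  width: number;
  height: number;
}

// Mirrors the handler: "/<original key>-<W>x<H>" with an allowed WxH, otherwise null.
function parseThumbnailUri(uri: string): ThumbnailRequest | null {
  const match = uri.match(thumbnailUriRegExp);
  if (match === null) {
    return null;
  }
  const [, originalKey, dimensions] = match;
  const [width, height] = dimensions.split('x').map(Number);
  return { originalKey, width, height };
}

// Scales uniformly so the image fits inside the box, but never above its original size.
function fitInside(
  srcWidth: number,
  srcHeight: number,
  boxWidth: number,
  boxHeight: number,
): { width: number; height: number } {
  const scale = Math.min(boxWidth / srcWidth, boxHeight / srcHeight, 1);
  return {
    width: Math.max(1, Math.round(srcWidth * scale)),
    height: Math.max(1, Math.round(srcHeight * scale)),
  };
}

console.log(parseThumbnailUri('/some-img.jpg-200x200')); // { originalKey: 'some-img.jpg', width: 200, height: 200 }
console.log(parseThumbnailUri('/some-img.jpg-300x300')); // null (size not allowed)
console.log(fitInside(1600, 900, 200, 200)); // { width: 200, height: 113 }
console.log(fitInside(40, 30, 200, 200)); // { width: 40, height: 30 } (no upscaling)
```

Because of `fit: inside`, a 200x200 thumbnail is a bounding box rather than an exact size: non-square originals come out smaller in one dimension.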

Once the code is ready, we have to prepare it for deployment to Lambda: we build it and compress it into a zip package. Here is the package.json, which includes the required packages and the script that creates the release zip:

```json
{
  "name": "image-resize",
  "private": true,
  "engines": {
    "node": "^16.15.0",
    "npm": "^8.5.0",
    "yarn": "please-use-npm"
  },
  "scripts": {
    "prebuild": "rimraf dist",
    "build": "tsc",
    "install-prod-deps": "SHARP_IGNORE_GLOBAL_LIBVIPS=1 npm ci --production --arch=x64 --platform=linux --libc=glibc",
    "create-release-zip": "npm ci && npm run build && rimraf release.zip && npm run install-prod-deps && zip -r -q release.zip dist node_modules"
  },
  "dependencies": {
    "@aws-sdk/client-s3": "^3.85.0",
    "@aws-sdk/lib-storage": "^3.85.0",
    "sharp": "^0.30.4"
  },
  "devDependencies": {
    "@types/aws-lambda": "^8.10.95",
    "@types/node": "^16.11.36",
    "@types/sharp": "^0.30.2",
    "rimraf": "^3.0.2",
    "typescript": "^4.7.2"
  }
}
```

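Now all you have to do is run `npm run create-release-zip` (the `zip` command-line tool must be installed). The `install-prod-deps` step reinstalls only the production dependencies for Linux x64 with glibc, the environment Lambda runs on. This way sharp's native binaries match Lambda even when you build on another operating system.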

Terraform code

Terraform is a tool from HashiCorp for managing infrastructure declaratively. Instead of manually creating instances, networks, etc. in your cloud provider's console, you write a configuration that describes how your infrastructure should look. This configuration is a human-readable text file. To change the infrastructure, you edit the configuration and run `terraform apply`. Terraform then makes the API calls to your cloud provider that are needed to bring the infrastructure in line with the configuration.
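The idea behind `terraform apply` can be illustrated with a toy reconciliation function. It compares the desired resources with the ones that exist and derives create, update and delete actions. This is a deliberately simplified sketch, not how Terraform is implemented: real plans also track state, dependencies and provider-specific rules. The resource names below, including `aws_instance.legacy`, are hypothetical.

```typescript
// Toy model of declarative reconciliation (NOT Terraform's real algorithm):
// compare desired resources with existing ones and list the actions needed.

type Resources = Record<string, Record<string, string>>;

type Action =
  | { kind: 'create'; name: string }
  | { kind: 'update'; name: string; changed: string[] }
  | { kind: 'delete'; name: string };

const has = (obj: object, key: string): boolean => Object.prototype.hasOwnProperty.call(obj, key);

function plan(desired: Resources, actual: Resources): Action[] {
  const actions: Action[] = [];
  for (const name of Object.keys(desired)) {
    if (!has(actual, name)) {
      actions.push({ kind: 'create', name }); // declared but missing
      continue;
    }
    const want = desired[name];
    const have = actual[name];
    // Attribute names present on either side, without duplicates.
    const keys = Object.keys(want)
      .concat(Object.keys(have))
      .filter((key, i, all) => all.indexOf(key) === i);
    const changed = keys.filter((key) => want[key] !== have[key]).sort();
    if (changed.length > 0) {
      actions.push({ kind: 'update', name, changed }); // exists but differs
    }
  }
  for (const name of Object.keys(actual)) {
    if (!has(desired, name)) {
      actions.push({ kind: 'delete', name }); // exists but no longer declared
    }
  }
  return actions;
}

// Hypothetical example: add a bucket, raise Lambda memory, drop an old instance.
const desired: Resources = {
  'aws_s3_bucket.main': { bucket: 'image-resize-test-cdn' },
  'aws_lambda_function.image_resize': { runtime: 'nodejs16.x', memory_size: '2048' },
};
const actual: Resources = {
  'aws_lambda_function.image_resize': { runtime: 'nodejs16.x', memory_size: '1024' },
  'aws_instance.legacy': { instance_type: 't3.micro' },
};
console.log(plan(desired, actual));
```

Running the same plan again after the actions are applied would return an empty list. That idempotence is what makes the declarative approach safe to repeat.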

Moving infrastructure management into text files lets us apply all our favorite tools for managing source code and development processes to the infrastructure as well. The infrastructure falls under version control just like the source code: changes can be reviewed the same way and rolled back to an earlier state if something goes wrong.

Now it’s time to write the Terraform code that will handle the infrastructure deployment.

First, the provider definition:

```hcl
terraform {
  required_version = "1.2.0"
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.16.0"
    }
  }
}

locals {
  name_prefix = "image-resize-test"
  default_tags = {
    Terraform = "true",
  }
}

provider "aws" {
  region = "eu-central-1"
  default_tags {
    tags = local.default_tags
  }
}

provider "aws" {
  // needed for Lambda@Edge deployment, as it must be in the us-east-1 region
  alias  = "global"
  region = "us-east-1"
  default_tags {
    tags = local.default_tags
  }
}
```

Second, the Lambda function:

```hcl
locals {
  release_zip_path = "${path.module}/function/release.zip" // path to release zip
}

data "aws_iam_policy_document" "assume_role" {
  statement {
    actions = ["sts:AssumeRole"]
    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com", "edgelambda.amazonaws.com"]
    }
  }
}

data "aws_iam_policy" "basic_execution_role" {
  arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}

// provide required accesses to S3 for lambda
data "aws_iam_policy_document" "s3_access" {
  // without s3:ListBucket, S3 answers HeadObject for a missing key with 403 instead of 404
  statement {
    actions   = ["s3:ListBucket"]
    resources = [aws_s3_bucket.main.arn]
  }
  statement {
    actions = [
      "s3:GetObject",
      "s3:PutObject",
    ]
    resources = ["${aws_s3_bucket.main.arn}/*"]
  }
}

resource "aws_iam_policy" "s3_access" {
  name   = "${local.name_prefix}-image-resize-lambda-s3-access"
  policy = data.aws_iam_policy_document.s3_access.json
}

resource "aws_iam_role" "image_resize_lambda" {
  name               = "${local.name_prefix}-image-resize-lambda"
  assume_role_policy = data.aws_iam_policy_document.assume_role.json
  managed_policy_arns = [
    data.aws_iam_policy.basic_execution_role.arn,
    aws_iam_policy.s3_access.arn,
  ]
}

resource "aws_lambda_function" "image_resize" {
  provider         = aws.global // picking the global aws provider to deploy it in the us-east-1 region
  function_name    = "${local.name_prefix}-image-resize"
  runtime          = "nodejs16.x"
  architectures    = ["x86_64"]
  memory_size      = 2048
  timeout          = 10
  handler          = "dist/index.handler"
  filename         = local.release_zip_path
  source_code_hash = filebase64sha256(local.release_zip_path)
  role             = aws_iam_role.image_resize_lambda.arn
  publish          = true // Lambda@Edge needs a published, numbered version
}
```
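Two constraints in this configuration are easy to miss. First, Lambda@Edge functions must be created in us-east-1, hence the `global` provider alias. Second, CloudFront must reference a numbered function version rather than `$LATEST` or an alias, hence `publish = true` and the use of `qualified_arn` in the distribution. The hypothetical helper below checks an ARN against both rules. It assumes the standard `aws` partition and uses a made-up account ID.

```typescript
// Checks two Lambda@Edge requirements reflected in the Terraform above:
// 1) the function is in us-east-1, 2) CloudFront gets a numbered version ARN
// (not $LATEST or an alias). Assumes the standard "aws" partition.

interface EdgeArnCheck {
  ok: boolean;
  problems: string[];
}

function checkEdgeLambdaArn(arn: string): EdgeArnCheck {
  // Expected shape: arn:aws:lambda:<region>:<account-id>:function:<name>:<version>
  const parts = arn.split(':');
  if (parts.length !== 8 || parts[0] !== 'arn' || parts[1] !== 'aws' || parts[2] !== 'lambda' || parts[5] !== 'function') {
    return { ok: false, problems: ['not a version-qualified Lambda function ARN'] };
  }
  const problems: string[] = [];
  const region = parts[3];
  const qualifier = parts[7];
  if (region !== 'us-east-1') {
    problems.push(`region is ${region}, but Lambda@Edge functions must be in us-east-1`);
  }
  if (!/^\d+$/.test(qualifier)) {
    problems.push(`qualifier "${qualifier}" is not a numbered version`);
  }
  return { ok: problems.length === 0, problems };
}

// Account ID 123456789012 is a placeholder.
const base = 'arn:aws:lambda:us-east-1:123456789012:function:image-resize-test-image-resize';
console.log(checkEdgeLambdaArn(`${base}:1`).ok); // true
console.log(checkEdgeLambdaArn(`${base}:$LATEST`).problems); // qualifier is not a numbered version
console.log(checkEdgeLambdaArn(base).ok); // false (unqualified ARN)
```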

And finally, the S3 bucket and the CloudFront distribution:

```hcl
resource "aws_s3_bucket" "main" {
  bucket = "${local.name_prefix}-cdn"
}

resource "aws_s3_bucket_acl" "main" {
  bucket = aws_s3_bucket.main.id
  acl    = "private"
}

resource "aws_s3_bucket_public_access_block" "main" {
  bucket                  = aws_s3_bucket.main.id
  block_public_acls       = true
  ignore_public_acls      = true
  block_public_policy     = true
  restrict_public_buckets = true
}

resource "aws_cloudfront_origin_access_identity" "main" {
  comment = "access-to-${aws_s3_bucket.main.bucket}"
}

data "aws_iam_policy_document" "main" {
  statement {
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.main.arn}/*"]
    principals {
      type        = "AWS"
      identifiers = [aws_cloudfront_origin_access_identity.main.iam_arn]
    }
  }
}

resource "aws_s3_bucket_policy" "main" {
  bucket = aws_s3_bucket.main.id
  policy = data.aws_iam_policy_document.main.json
}

resource "aws_cloudfront_distribution" "main" {
  comment         = aws_s3_bucket.main.bucket
  enabled         = true
  is_ipv6_enabled = true
  price_class     = "PriceClass_100"

  origin {
    domain_name = aws_s3_bucket.main.bucket_regional_domain_name
    origin_id   = aws_s3_bucket.main.id
    s3_origin_config {
      origin_access_identity = aws_cloudfront_origin_access_identity.main.cloudfront_access_identity_path
    }
  }

  viewer_certificate {
    cloudfront_default_certificate = true
  }

  default_cache_behavior {
    allowed_methods        = ["GET", "HEAD", "OPTIONS"]
    cached_methods         = ["GET", "HEAD"]
    compress               = true
    target_origin_id       = aws_s3_bucket.main.id
    viewer_protocol_policy = "redirect-to-https"
    min_ttl                = 0
    default_ttl            = 3600
    max_ttl                = 604800

    forwarded_values {
      query_string = false
      cookies {
        forward = "none"
      }
    }

    lambda_function_association {
      event_type = "origin-request"
      lambda_arn = aws_lambda_function.image_resize.qualified_arn
    }
  }

  restrictions {
    geo_restriction {
      restriction_type = "none"
    }
  }
}
```
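The distribution above caches objects according to `min_ttl`, `default_ttl` and `max_ttl`. The sketch below is a simplified model of how CloudFront combines these settings with the origin's `Cache-Control: max-age` header. It ignores `s-maxage`, `Expires` and `no-cache`/`no-store` directives. Under this model, thumbnails uploaded by the Lambda (which sets only `ContentType`) would be cached for `default_ttl`, i.e. one hour. `effectiveTtl` is a hypothetical name.

```typescript
// Simplified model of how CloudFront picks a cache lifetime (in seconds) from
// min_ttl/default_ttl/max_ttl and the origin's Cache-Control max-age.
// Other headers and directives (s-maxage, Expires, no-store, ...) are ignored.

interface TtlSettings {
  minTtl: number;
  defaultTtl: number;
  maxTtl: number;
}

function effectiveTtl(settings: TtlSettings, originMaxAge?: number): number {
  if (originMaxAge === undefined) {
    // No max-age from the origin: fall back to default_ttl.
    return settings.defaultTtl;
  }
  // Otherwise the origin's max-age is clamped into [min_ttl, max_ttl].
  return Math.min(Math.max(originMaxAge, settings.minTtl), settings.maxTtl);
}

// The values used in the distribution above.
const distributionTtl: TtlSettings = { minTtl: 0, defaultTtl: 3600, maxTtl: 604800 };
console.log(effectiveTtl(distributionTtl)); // 3600
console.log(effectiveTtl(distributionTtl, 86400)); // 86400
console.log(effectiveTtl(distributionTtl, 31536000)); // 604800
```

To cache thumbnails longer, one option is to set a `CacheControl` value in the `Upload` params of the handler. The exact header value is a design choice left to the reader.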

The S3 bucket blocks all direct public access, so the only way to fetch data is through CloudFront.

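Now we can deploy the infrastructure. Make sure proper AWS credentials are configured, and that the release zip is in place at `function/release.zip`, because the Lambda resource reads it from there. Initialize Terraform with `terraform init`, then apply the changes with `terraform apply` and confirm by typing `yes`.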

Results

https://{CLOUDFRONT_URL}.cloudfront.net/some-img.jpg

https://{CLOUDFRONT_URL}.cloudfront.net/some-img.jpg-200x200

https://{CLOUDFRONT_URL}.cloudfront.net/some-img.jpg-50x50
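Client code only needs the naming scheme to link to a thumbnail. Here is a small, hypothetical helper that builds URLs like the ones above for every allowed dimension. The domain `d111111abcdef8.cloudfront.net` is a placeholder. Each path segment of the key is percent-encoded so that keys with spaces or non-ASCII characters still produce valid URLs.

```typescript
// Builds thumbnail URLs using the "<key>-<width>x<height>" naming scheme.
// The dimensions must match the ones the Lambda allows.

const thumbnailDimensions = ['50x50', '200x200'] as const;

function thumbnailUrls(distributionDomain: string, key: string): string[] {
  // Encode each path segment, but keep "/" separators between "folders".
  const encodedKey = key.split('/').map(encodeURIComponent).join('/');
  return thumbnailDimensions.map((dimension) => `https://${distributionDomain}/${encodedKey}-${dimension}`);
}

// Placeholder distribution domain.
console.log(thumbnailUrls('d111111abcdef8.cloudfront.net', 'some-img.jpg'));
```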

A URL ending with some-img.jpg-50x50 might look strange, but at MobileReality we store files in S3 without extensions (relying on the Content-Type header instead), so this is not a problem in our case. Of course, you can adapt this implementation to your needs. For example, the link could also be 50x50/some-img.jpg if we would like to stick with jpg files.

We hope that with all this information, you are ready to create compelling thumbnails that will generate more clicks and views for your content.

Depending on the tool you choose to create your thumbnails, the editing process will differ a little. In general, though, thumbnail tools are intuitive, and you can always go back to our video to watch the step-by-step process of creating images for videos.

Don’t forget that thumbnails should be aligned with your content. So, focus on sharing creative topics that add value to your audience and make users aware of your authority on the topics covered.

...